Out of the Weeds, Part III

In this series of articles, our goal has been to demystify some of the common buzzwords being used in our industry and show how they are relevant and practical to consumer insights.

In case you missed the first installment in this series- machine learning is a branch of artificial intelligence that automates analytical model building, where systems can learn from data and identify patterns.

This third installment is all about decision trees, how they are used, and the implications for consumer research.

Decision trees- a predictive modeling approach in machine learning- use observations about a certain item to help make conclusions about the item’s target value.

You don’t need to understand how to build the models yourself to be able to utilize the power of these and other ML techniques. (hint: call us)

What Are Decision Trees?

Decision trees are a non-parametric supervised learning method used for both classification and regression (prediction) tasks. Non-parametric simply means that fewer assumptions are made about the population, or rather the data is not required to fit a normal distribution.

That is not meant to imply that such models completely lack parameters, but that the number and type of parameters are flexible and not pre-fixed. Non-parametric data is also often ordinal in nature.

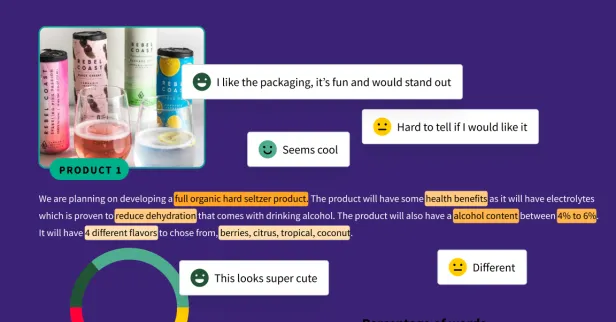

For example, a survey of consumers asking their preferences on a range from Dislike to Like (or any other type of Likert scale) would be considered ordinal data.

Supervised learning is the machine learning task of inferring an output given an existing labeled data set. Whereas unsupervised learning seeks to uncover the hidden structure/pattern within an unlabeled data set.

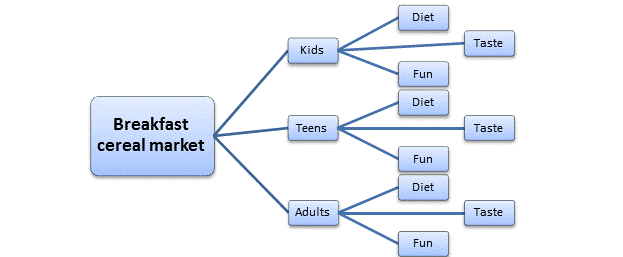

The primary goal of a decision tree algorithm is to build a model that classifies and then predicts the value of a variable or outcome by learning a series of simple rules inferred from the structure of the data. The most common “rule” is in the format of an “if/then” statement.

Decision tree algorithms are considered to be a class of powerful models for their ability to achieve a high accuracy, while also being both clear and interpretable (e.g. "we believe with a high degree of certainty that our customers will behave in this way.")

Decision trees play into our decisions as consumers at all points during the day. With some effective research, it’s possible to get a better understanding of where and how consumers navigate those choices

How do you start your day?

The tree can be as simple or as complex as the situation requires. All decision trees enable users to develop a classification system that can predict an outcome of a certain interest or topic. For example, how likely is a certain segment of consumers to make a purchase?

How Does it Work?

There are several methods used to build the actual classification system. All of them more or less accomplish the same thing: they classify and then make predictions.

The choice of a particular algorithm is largely dependent on whether you are attempting to predict a continuous variable (e.g. rating scale) or a categorical variable (e.g. gender, specific income level, etc.). Then, of course the level of complexity of the actual variable itself. A binary Yes/No is less complex than a three level categorical variable, Yes, No, Maybe.

Another way to describe a machine learning decision tree is as a Classification and Regression (C&R) Tree. Same as before, the C&R Tree algorithm generates a decision tree that allows you to predict or classify future observations.

This method uses a recursive partitioning to split the records into segments of either predicting the values of a continuous variable (regression) or predicting the values of a categorical dependent variable from one or more continuous and/or categorical predictor variables.

A C&R tree node is considered “pure” if all cases in the node fall into a specific category. The C&R Tree node input fields can be numeric or categorical, while all of the splits are binary.

For example, we may be interested in predicting who will or will not be a repeat purchaser or renew their subscription.

Another, similar type of tree building algorithm is the CHAID node method, which uses Chi Square statistics to identify ultimate splits, allowing for the splits to expand beyond two branches- perhaps a topic we can dive deeper into later!

How (and When) To Use Decision Trees

The use cases for using a decision tree based algorithm in the world of consumer insights are numerous and probably used more than you may have thought.

Among the more common applications are:

- Segmentation: Identify consumers who are likely to be influenced

- Stratification: Assign consumer segments into various categories (e.g. low, medium, high levels of loyalty)

- Prediction: Create rules to predict a related outcome (e.g. likelihood of purchase versus no purchase)

- Consumer Journey mapping: Classifications and predictions to map out a specific consumer journey

Happy Growing!