Advertising plays a critical role in shaping brand perception and driving consumer behavior. However, not all ads are created equal, and even the most creative campaigns can fall flat if they don't resonate with the target audience.

This is where ad testing comes into play. By rigorously evaluating your ads before they go live, you can ensure they effectively communicate your message and achieve your desired outcomes.

Today, we'll explore the fundamentals of ad testing and provide ten practical tips for running more effective ad tests.

What is Ad Testing & Why Do Brands Use It?

Ad testing is the process of evaluating the effectiveness of an advertisement before it is fully launched. This involves gathering feedback from a sample of your target audience to determine how well the ad performs in terms of engagement, comprehension, appeal, and persuasion. Brands use ad testing to identify potential issues, refine their messaging, and optimize their creative elements to maximize impact.

The primary goals of ad testing are to:

Ensure Clarity: Determine if the ad's message is clear and easily understood.

Assess Emotional Response: Understand the emotional reactions the ad evokes.

Measure Engagement: Assess how well the ad captures and maintains attention.

Evaluate Persuasion: Evaluate the ad's effectiveness in driving desired actions, such as purchases or sign-ups.

Optimize ROI: Maximize the return on investment by refining the ad based on feedback.

By conducting ad tests, brands can make data-driven decisions that enhance the effectiveness of their campaigns, reduce the risk of costly mistakes, and ultimately achieve better results.

How to Test Ads

Ad testing can be approached in several ways, depending on the specific goals and resources available. Here are the common types of ad concept testing:

Monadic Testing

Respondents are shown a single ad and asked for their feedback. This approach is straightforward and avoids any potential biases from comparing multiple ads at once.

Sequential Monadic Testing

Participants are shown multiple ads, one after the other, and provide feedback on each. This method allows for comparisons while minimizing the influence of seeing all ads simultaneously.
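One common way to limit order effects in a sequential monadic test is to shuffle the viewing order for each respondent, so no ad always appears first. Here is a minimal Python sketch of that idea; the function name, respondent IDs, and ad labels are hypothetical and not tied to any particular survey platform.

```python
import random

def assign_ad_orders(respondent_ids, ads, seed=0):
    """Give each respondent an independently shuffled viewing order."""
    rng = random.Random(seed)  # fixed seed so the assignment is reproducible
    orders = {}
    for rid in respondent_ids:
        order = list(ads)      # copy so the original list is left untouched
        rng.shuffle(order)
        orders[rid] = order
    return orders

# Hypothetical example: three ad concepts shown to four respondents
ads = ["Ad A", "Ad B", "Ad C"]
orders = assign_ad_orders(["r1", "r2", "r3", "r4"], ads)
for rid, order in orders.items():
    print(rid, order)
```

Every respondent still sees all the ads, only in a different sequence, so feedback on each ad is less likely to be colored by what came before it.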

Comparative Testing

Respondents view two or more ads side-by-side and are asked to compare them based on specific criteria. This can help identify the most effective ad among the options.

Split Testing (A/B Testing)

Two versions of an ad (A and B) are shown to separate, randomly assigned groups from the same target audience to determine which version performs better. This is particularly useful for digital ads, where variations can be easily deployed and measured.
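To judge whether the gap between two versions is more than noise, one widely used option is a two-proportion z-test on conversion (or click) rates. The sketch below is an illustration under simple assumptions (independent groups, reasonably large samples), not a prescribed method; the function name and the counts in the example are made up.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the assumption that both versions perform the same
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("Cannot test: no variation in the observed outcomes.")
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical results: 120 of 2,400 people converted on A, 156 of 2,400 on B
z, p_value = two_proportion_z_test(120, 2400, 156, 2400)
print(f"z = {z:.2f}, p = {p_value:.3f}")
```

A small p-value (0.05 is a common threshold) suggests the difference between versions is unlikely to be due to chance alone.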

Pre-Post Testing

This involves measuring key metrics before and after exposure to the ad. It helps determine the impact of the ad on brand awareness, perception, and behavior.
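The before-and-after comparison usually comes down to a lift calculation. A minimal sketch, assuming awareness is measured as the share of respondents who recognize the brand (the survey numbers and function name are hypothetical):

```python
def pre_post_lift(pre_rate, post_rate):
    """Return (absolute lift in percentage points, relative lift as a fraction)."""
    if pre_rate == 0:
        raise ValueError("Relative lift is undefined when the pre-exposure rate is 0.")
    absolute_points = (post_rate - pre_rate) * 100
    relative = (post_rate - pre_rate) / pre_rate
    return absolute_points, relative

# Hypothetical survey results: share of respondents aware of the brand
pre_awareness = 320 / 1000   # before seeing the ad
post_awareness = 410 / 1000  # after seeing the ad

points, relative = pre_post_lift(pre_awareness, post_awareness)
print(f"Absolute lift: {points:.1f} points, relative lift: {relative:.1%}")
```

In this example awareness rises from 32% to 41%, a 9-point absolute lift and roughly a 28% relative lift. Reporting both helps avoid overstating gains that start from a small base.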

Ten Tips for Running Better Ad Tests

Define Clear Objectives

Why it matters: Clear objectives provide direction and focus for your ad testing efforts. They help you determine what metrics to measure and what questions to ask.

How to do it: Identify what you want to achieve with your ad, such as increasing brand awareness, driving sales, or improving customer sentiment. Establish specific, measurable goals that align with these objectives.

Choose the Right Audience

Why it matters: Testing with the right audience ensures that the feedback you receive is relevant and actionable. It reflects the opinions and preferences of your target market.

How to do it: Segment your audience based on demographics, psychographics, and behavioral data. Ensure your sample is large enough for the results to be statistically reliable.
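How large is "large enough"? One standard rule of thumb is the sample-size formula for estimating a proportion within a chosen margin of error, n = z² · p(1 − p) / e². The sketch below assumes a simple random sample from a large population; the function name and defaults are illustrative.

```python
import math
from statistics import NormalDist

def sample_size_for_proportion(margin_of_error, confidence=0.95, expected_rate=0.5):
    """Respondents needed to estimate a proportion within +/- margin_of_error."""
    # z-score for a two-sided confidence level (about 1.96 at 95%)
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    # expected_rate=0.5 is the most conservative (largest sample) assumption
    n = (z ** 2) * expected_rate * (1 - expected_rate) / margin_of_error ** 2
    return math.ceil(n)

print(sample_size_for_proportion(0.05))  # margin of +/- 5 percentage points
print(sample_size_for_proportion(0.03))  # margin of +/- 3 percentage points
```

At 95% confidence this works out to roughly 385 respondents for a 5-point margin and about 1,068 for a 3-point margin. If you plan to compare segments, each segment needs a sample of that size, not just the total.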

Craft Effective Survey Questions

Why it matters: Well-designed survey questions elicit meaningful responses that provide insights into the ad's performance. Poorly crafted questions can lead to ambiguous or irrelevant feedback.

How to do it: Use a mix of open-ended and closed-ended questions. Focus on clarity, avoid leading questions, and ensure each question aligns with your testing objectives.

Utilize Visual and Emotional Metrics

Why it matters: Ads are not just about conveying information; they also evoke emotions and rely on visual appeal. Measuring these aspects provides a comprehensive understanding of the ad's impact.

How to do it: Use tools like facial expression analysis, eye-tracking, and sentiment analysis to gauge emotional and visual responses. Combine these metrics with traditional survey data for deeper insights.

Test Across Multiple Platforms

Why it matters: Ads can perform differently across various platforms due to differences in audience behavior and platform characteristics. Testing across multiple platforms ensures broader applicability of your findings.

How to do it: Run tests on platforms where you plan to launch your ad, such as social media, TV, and print. Compare performance metrics to understand platform-specific strengths and weaknesses.

Include Benchmark Comparisons

Why it matters: Benchmarking your ad against industry standards or previous campaigns provides context for your results. It helps you understand how your ad stacks up against the competition.

How to do it: Use industry benchmarks to compare key metrics like engagement, recall, and persuasion. Analyze how your ad performs relative to these benchmarks to identify areas for improvement.

Analyze Both Quantitative and Qualitative Data

Why it matters: Quantitative data provides measurable insights, while qualitative data offers context and depth. Combining both types of data leads to a more holistic understanding of ad performance.

How to do it: Collect quantitative data through surveys and metrics analysis. Gather qualitative data through open-ended questions, focus groups, and interviews. Integrate findings from both sources for a comprehensive analysis.

Iterate Based on Feedback

Why it matters: Ad testing is an iterative process. Incorporating feedback and making necessary adjustments leads to continuous improvement and better final outcomes.

How to do it: Identify key areas for improvement based on test results. Make changes to the ad and retest to evaluate the impact of these adjustments. Repeat this process until you achieve optimal performance.

Monitor Real-Time Performance

Why it matters: Real-time monitoring allows for timely adjustments based on current performance. It helps identify issues early and make necessary tweaks to enhance effectiveness.

How to do it: Use analytics tools to track key performance indicators (KPIs) in real-time. Set up alerts for significant deviations from expected performance and be prepared to make on-the-fly adjustments.
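An alert rule can be as simple as flagging any KPI that drifts too far from its expected value. The sketch below is one possible approach, assuming you already pull current KPI values from your analytics tool; the KPI names, targets, and the 20% tolerance are hypothetical placeholders.

```python
def find_kpi_alerts(observed, expected, tolerance=0.20):
    """Return KPIs whose relative deviation from the expected value exceeds tolerance."""
    alerts = {}
    for kpi, target in expected.items():
        if kpi not in observed or target == 0:
            continue  # skip KPIs with no data or no usable target
        deviation = (observed[kpi] - target) / target
        if abs(deviation) > tolerance:
            alerts[kpi] = deviation
    return alerts

# Hypothetical targets and the latest observed values
expected = {"ctr": 0.020, "conversion_rate": 0.050, "cost_per_click": 1.20}
observed = {"ctr": 0.013, "conversion_rate": 0.052, "cost_per_click": 1.25}

alerts = find_kpi_alerts(observed, expected)
for kpi, deviation in alerts.items():
    print(f"ALERT {kpi}: {deviation:+.0%} vs. expected")
```

Here only the click-through rate is flagged, since it sits 35% below target while the other two KPIs stay within tolerance. In practice you would tune the tolerance per KPI, because some metrics are naturally noisier than others.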

Leverage Technology and Tools

![]() Why it matters: Advanced tools and technologies can streamline the ad testing process, provide deeper insights, and enhance the accuracy of your findings.

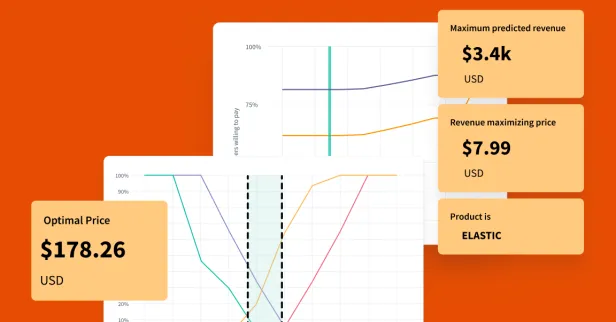

Why it matters: Advanced tools and technologies can streamline the ad testing process, provide deeper insights, and enhance the accuracy of your findings.![]() How to do it: Utilize platforms like SightX that offer comprehensive ad testing solutions. These platforms can help automate data collection, analysis, and reporting, making the testing process more efficient and effective.

How to do it: Utilize platforms like SightX that offer comprehensive ad testing solutions. These platforms can help automate data collection, analysis, and reporting, making the testing process more efficient and effective.

Ad Testing with SightX

We know how important ad testing is for marketers looking to improve their ROI. By leveraging the right tools, companies can make informed decisions, minimize risks, and enhance their ad campaigns.

At SightX, we infuse the power of generative AI into advanced ad testing tools so you can:

Create fully customized tests and experiments with a prompt.

Collect data from your target audience.

Receive fully analyzed and summarized results in seconds, revealing key insights and personalized recommendations.

Let us show you how simple it can be to collect powerful insights.